What is Elasticsearch?

Elasticsearch is an open-source, RESTful, distributed search engine built on top of Apache Lucene. Lucene is arguably the most advanced, high-performance, and fully featured search engine library in existence today, open source or proprietary. Elasticsearch is written in Java and uses Lucene internally for all of its indexing and searching, but it aims to make full-text search easy by hiding the complexities of Lucene behind a simple, coherent, RESTful API.

Basic concepts and terminology:

1. Near Realtime (NRT): Elasticsearch is a near-real-time search platform. This means there is a slight latency (normally one second) between the time you index a document and the time it becomes searchable.

2. Cluster: A cluster is a collection of one or more nodes (servers) that together hold your entire data and provide federated indexing and search capabilities across all nodes. The default cluster name is "elasticsearch".

3. Node: A node is a single server that is part of your cluster, stores your data, and participates in the cluster's indexing and search capabilities.

4. Index: An index is a collection of documents that have somewhat similar characteristics, much like a database in a relational system.

5. Type: Within an index, you can define one or more types. A type is a logical category/partition of your index, defined for documents that have a set of common fields, much like a table in a relational database.

6. Document: A document is a basic unit of information that can be indexed.
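
To make the idea of a document concrete, here is a minimal sketch using only the Java standard library. Elasticsearch documents are expressed as JSON, so the sketch renders a small set of fields as a JSON object. The class `DocumentExample` and its helpers `toJson` and `quote` are hypothetical names made up for this illustration (they are not part of the Elasticsearch API), and the field names are borrowed from the sample code later in this post.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.StringJoiner;

// Hypothetical illustration class; not part of the Elasticsearch API.
public class DocumentExample {

    // Renders a flat map of string fields as a JSON object.
    // Only string values are supported, and only quotes and backslashes are
    // escaped; a real application would use a JSON library instead.
    static String toJson(Map<String, String> fields) {
        StringJoiner json = new StringJoiner(",", "{", "}");
        for (Map.Entry<String, String> field : fields.entrySet()) {
            json.add(quote(field.getKey()) + ":" + quote(field.getValue()));
        }
        return json.toString();
    }

    // Wraps text in double quotes, escaping backslashes and embedded quotes.
    static String quote(String text) {
        return "\"" + text.replace("\\", "\\\\").replace("\"", "\\\"") + "\"";
    }

    public static void main(String[] args) {
        // A "user" document with two fields; LinkedHashMap keeps insertion order.
        Map<String, String> user = new LinkedHashMap<>();
        user.put("FirstName", "Uttesh");
        user.put("LastName", "Kumar T.H.");
        System.out.println(toJson(user));
        // prints {"FirstName":"Uttesh","LastName":"Kumar T.H."}
    }
}
```

Each key in the map corresponds to a field of the document, which is the unit Elasticsearch indexes and returns from searches.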

The image below shows how relational database concepts map to an Elasticsearch index, which makes the Elasticsearch terms and API easier to understand.

In Elasticsearch, a document belongs to a type, and those types live inside an index. You can draw some (rough) parallels to a traditional relational database:

Relational DB ⇒ Databases ⇒ Tables ⇒ Rows ⇒ Columns

Elasticsearch ⇒ Indices ⇒ Types ⇒ Documents ⇒ Fields
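
The same hierarchy shows up in how a single document is addressed over the RESTful API. The sketch below is a rough illustration only: it assumes the type-based addressing of the Elasticsearch 2.x line (`/{index}/{type}/{id}`) and the default HTTP port 9200. The class `DocumentAddress` and the method `documentUri` are hypothetical names, and the index, type, and id values are the ones used in the sample code later in this post.

```java
import java.net.URI;

// Hypothetical illustration class; not part of the Elasticsearch API.
public class DocumentAddress {

    // Builds the REST address of one document from its index, type, and id.
    // Assumes all parts are already URL-safe; no escaping is performed.
    static URI documentUri(String host, int httpPort, String index, String type, String id) {
        return URI.create("http://" + host + ":" + httpPort + "/" + index + "/" + type + "/" + id);
    }

    public static void main(String[] args) {
        // Index "users" (like a database), type "user" (like a table), id "1" (like a row key).
        System.out.println(documentUri("localhost", 9200, "users", "user", "1"));
        // prints http://localhost:9200/users/user/1
    }
}
```

Reading the path left to right follows the same order as the mapping above: index, then type, then the individual document.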

Maven dependency for the Elasticsearch Java API:

    <dependency>
        <groupId>org.elasticsearch</groupId>
        <artifactId>elasticsearch</artifactId>
        <version>2.1.1</version>
    </dependency>

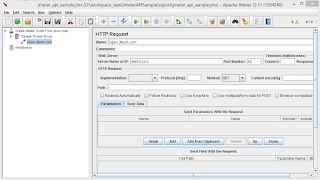

Client: Using the Java client, we can perform the following operations on an Elasticsearch cluster or node:

1. Perform standard index, get, delete and search operations on an existing cluster.

2. Perform administrative tasks on a running cluster.

3. Start full nodes when you want to run Elasticsearch embedded in your own application or when you want to launch unit or integration tests.

There are two ways to obtain a client: 1. Node Client 2. TransportClient.

Node Client: Instantiating a node-based client is the simplest way to get a Client that can execute operations against Elasticsearch.

TransportClient: The TransportClient connects remotely to an Elasticsearch cluster using the transport module. It does not join the cluster, but simply gets one or more initial transport addresses and communicates with them.

Sample Elasticsearch CRUD code:

Node Client:

    // Start an embedded node that joins the named cluster, then ask it for a client.
    Node node = NodeBuilder.nodeBuilder().clusterName("yourclustername").node();
    Client client = node.client();

TransportClient:

    // ElasticConstants.CLUSTER_NAME is a constant from the sample project;
    // cluster, host and port are supplied by the caller.
    Settings settings = Settings.settingsBuilder()
            .put(ElasticConstants.CLUSTER_NAME, cluster)
            .build();
    TransportClient transportClient = TransportClient.builder().settings(settings).build()
            .addTransportAddress(new InetSocketTransportAddress(InetAddress.getByName(host), port));

Create Index: We can create an IndexRequest directly, or use XContentBuilder to build the document that will be stored in the index.

    // Build the JSON document from a map of field names to values.
    XContentBuilder jsonBuilder = XContentFactory.jsonBuilder();
    Map<String, Object> data = new HashMap<String, Object>();
    data.put("FirstName", "Uttesh");
    data.put("LastName", "Kumar T.H.");
    jsonBuilder.map(data);

    // Stores the document under the given index, type and id; returns null on failure.
    public IndexResponse createIndex(String index, String type, String id, XContentBuilder jsonData) {
        IndexResponse response = null;
        try {
            response = ElasticSearchUtil.getClient().prepareIndex(index, type, id)
                    .setSource(jsonData)
                    .get();
            return response;
        } catch (Exception e) {
            logger.error("createIndex", e);
        }
        return null;
    }

Find Document By Index:

    public void findDocumentByIndex() {
        GetResponse response = findDocumentByIndex("users", "user", "1");
        Map<String, Object> source = response.getSource();
        System.out.println("------------------------------");
        System.out.println("Index: " + response.getIndex());
        System.out.println("Type: " + response.getType());
        System.out.println("Id: " + response.getId());
        System.out.println("Version: " + response.getVersion());
        System.out.println("getFields: " + response.getFields());
        System.out.println(source);
        System.out.println("------------------------------");
    }

    // Fetches a single document by index, type and id; returns null on failure.
    public GetResponse findDocumentByIndex(String index, String type, String id) {
        try {
            GetResponse getResponse = ElasticSearchUtil.getClient().prepareGet(index, type, id).get();
            return getResponse;
        } catch (Exception e) {
            logger.error("findDocumentByIndex", e);
        }
        return null;
    }

Find Document By Value:

    public void findDocumentByValue() {
        SearchResponse response = findDocument("users", "user", "LastName", "Kumar T.H.");
        SearchHit[] results = response.getHits().getHits();
        System.out.println("Current results: " + results.length);
        for (SearchHit hit : results) {
            System.out.println("--------------HIT----------------");
            System.out.println("Index: " + hit.getIndex());
            System.out.println("Type: " + hit.getType());
            System.out.println("Id: " + hit.getId());
            System.out.println("Version: " + hit.getVersion());
            Map<String, Object> result = hit.getSource();
            System.out.println(result);
        }
        // At least one document should match the query.
        Assert.assertTrue(response.getHits().totalHits() > 0);
    }

    // Runs a match query on one field within the given index and type; returns null on failure.
    public SearchResponse findDocument(String index, String type, String field, String value) {
        try {
            QueryBuilder queryBuilder = new MatchQueryBuilder(field, value);
            SearchResponse response = ElasticSearchUtil.getClient().prepareSearch(index)
                    .setTypes(type)
                    .setSearchType(SearchType.QUERY_AND_FETCH)
                    .setQuery(queryBuilder)
                    .setFrom(0).setSize(60).setExplain(true)
                    .execute()
                    .actionGet();
            return response;
        } catch (Exception e) {
            logger.error("findDocument", e);
        }
        return null;
    }

Update Document:

    public void UpdateDocument() throws IOException {
        // Build the new field values for the document with id "1".
        XContentBuilder jsonBuilder = XContentFactory.jsonBuilder();
        Map<String, Object> data = new HashMap<String, Object>();
        data.put("FirstName", "Uttesh Kumar");
        data.put("LastName", "TEST");
        jsonBuilder.map(data);
        UpdateResponse updateResponse = updateIndex("users", "user", "1", jsonBuilder);
    }

    // Applies the given fields to an existing document; returns null on failure.
    public UpdateResponse updateIndex(String index, String type, String id, XContentBuilder jsonData) {
        UpdateResponse response = null;
        try {
            System.out.println("updateIndex ");
            response = ElasticSearchUtil.getClient().prepareUpdate(index, type, id)
                    .setDoc(jsonData)
                    .execute().get();
            System.out.println("response " + response);
            return response;
        } catch (Exception e) {
            logger.error("UpdateIndex", e);
        }
        return null;
    }

Remove Document:

    public void RemoveDocument() throws IOException {
        DeleteResponse deleteResponse = elastiSearchService.removeDocument("users", "user", "1");
    }

    // Deletes a single document by index, type and id; returns null on failure.
    public DeleteResponse removeDocument(String index, String type, String id) {
        DeleteResponse response = null;
        try {
            response = ElasticSearchUtil.getClient().prepareDelete(index, type, id).execute().actionGet();
            return response;
        } catch (Exception e) {
            logger.error("RemoveIndex", e);
        }
        return null;
    }

Full sample code is available on GitHub.